High performance computing (HPC) is the use of parallel processing capabilities to solve complex computational or scientific problems. HPC is utilized in a broad range of industries, sectors, and use cases – from data simulations, analytics and modelling, to 3D rendering, geospatial mapping, and AI/ML applications.

A fundamental enabler of HPC is the ability to process large datasets in parallel for faster decision making. Traditionally, HPC is achieved by clustering a number of large servers together on-premises. In recent years, cloud service providers have become an alternative, offering on-demand scale for sporadic or short bursts of processing. While both options have their pros, neither works well in remote edge use cases where connectivity is limited.

Why deploy high performance computing to the edge?

Across many industries and market segments, vast datasets are available at the edge. Given the volume of data, it makes sense to process it locally. This is especially true where low-latency decision making can make the difference to a positive outcome. Some example use cases are:

- Government: Up-to-date situational awareness e.g. real-time intelligence gathering and insights

- First responders and public safety: Emergency preparedness e.g. mapping and weather modelling

- Heavy industry (mining, oil and gas): Health and safety e.g. material science, quality and environmental analysis

- Transportation: Security e.g. real-time tracking, CCTV and safety monitoring sensor fusion

A further advantage of deploying HPC to the edge is reducing, or even eliminating, the cost of supporting and maintaining an always-on backhaul network. In remote areas, satellite may be the only connectivity option, and the cost per MB sent quickly adds up.
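To put rough numbers on the backhaul cost, the short Python sketch below compares sending all raw data over a satellite link billed per MB with sending only edge-processed results. Every figure in it (500 GB/day of raw data, $0.01 per MB, a 1% residual volume after local processing) is a hypothetical placeholder, not a quoted satellite tariff or a measured VoyagerHPC result, and `monthly_backhaul_cost` is an illustrative helper.

```python
# Back-of-envelope satellite backhaul cost comparison.
# All figures below are HYPOTHETICAL placeholders, not real prices or measurements.

MB_PER_GB = 1000  # decimal units, as commonly used in data plans


def monthly_backhaul_cost(gb_per_day: float, price_per_mb: float, days: int = 30) -> float:
    """Cost of sending gb_per_day over a link billed per MB, for `days` days."""
    return gb_per_day * MB_PER_GB * price_per_mb * days


# Hypothetical remote site: 500 GB/day of raw sensor/video data at $0.01 per MB.
raw_gb_per_day = 500.0
price_per_mb = 0.01

# Hypothetical assumption: local (edge) processing means only 1% of the raw
# volume, e.g. alerts and summaries, still has to be sent over the link.
residual_fraction = 0.01

raw_cost = monthly_backhaul_cost(raw_gb_per_day, price_per_mb)
edge_cost = monthly_backhaul_cost(raw_gb_per_day * residual_fraction, price_per_mb)

print(f"Send all raw data:   ${raw_cost:,.0f} per month")
print(f"Process at the edge: ${edge_cost:,.0f} per month")
```

Under this simple per-MB model, cost scales linearly with volume, so the monthly bill falls in direct proportion to how much data edge processing removes; with the placeholder 1% figure, the two results differ by a factor of 100. Real satellite plans often add fixed fees or tiered pricing, which this sketch ignores.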

Rugged and Modular HPC

There are many constraints to consider when deploying HPC to the edge, typically including power, weight and size. Beyond these, to withstand whatever the edge throws at it, especially in mobile use cases such as those highlighted above, an HPC system needs to be rugged.

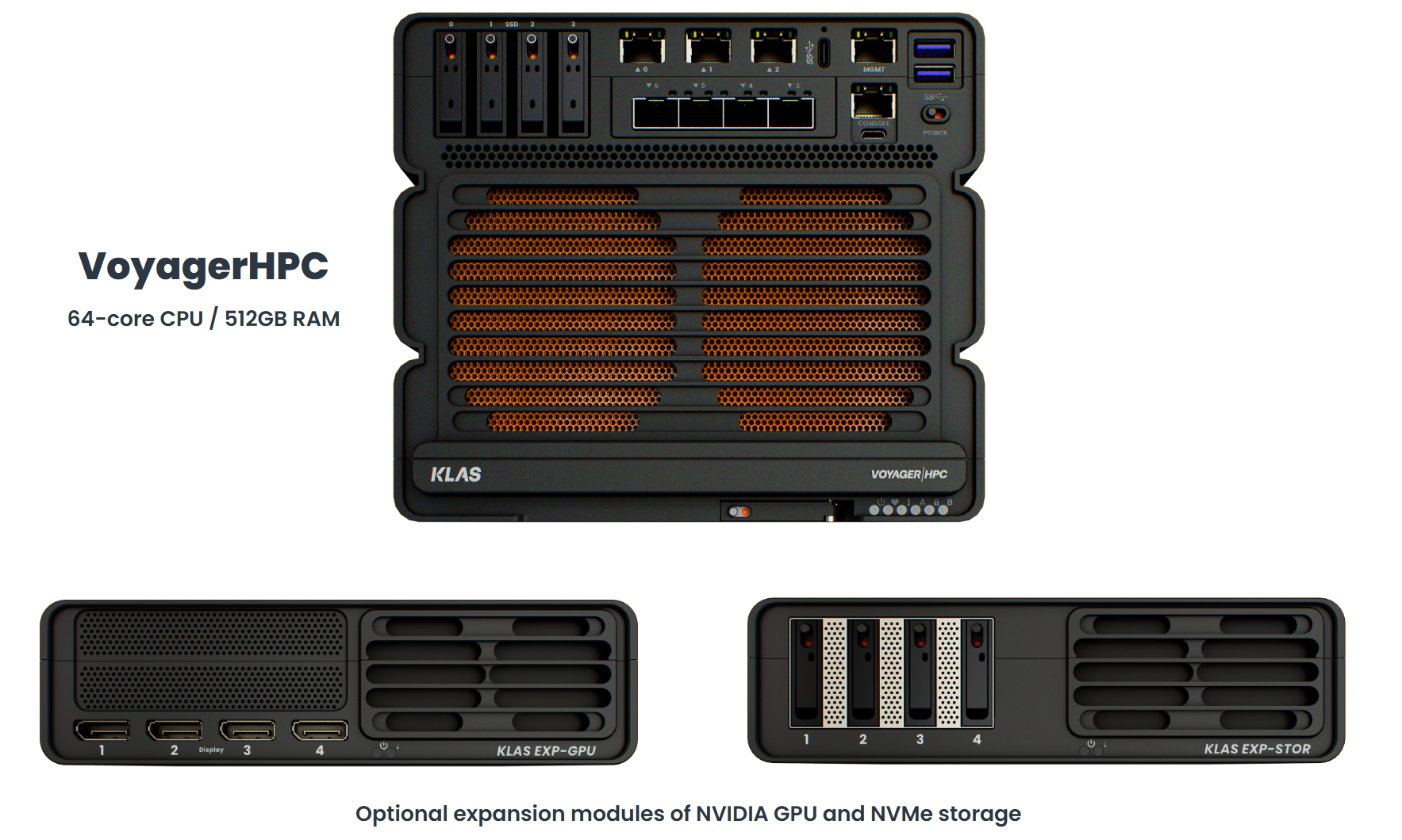

VoyagerHPC from Klas is the only HPC designed to be rugged for the edge. Its size and weight make it highly portable. In addition, VoyagerHPC supports Nvidia GPU and NVMe storage expansion, making it more versatile in meeting HPC requirements at the edge.

Figure: VoyagerHPC is a modular HPC, designed to support the widest range of edge use cases

A major advantage of VoyagerHPC’s discrete GPU expansion module (versus a built-in GPU) is the flexibility and cost savings it offers when deploying HPC with GPU at the edge. A simple question to ask: once AI training is complete, is the GPU at the edge redundant, and would scaled storage be more beneficial?

Future-proofed HPC

With VoyagerHPC, gain a generational leap forward in edge compute capabilities. For the first time, achieve more with the smallest form factor HPC ever designed for the edge, and unlock new intelligence for decision advantage at the edge.

With VoyagerHPC and the Nvidia GPU expansion module, re-imagine how AI models are trained. Provide developers with an HPC and GPU in a centralized location, then keep AI models and inference accurate through continuous training by moving the HPC and GPU to the edge and validating the models against real-world data.

In addition to the cost savings, the expansion capabilities of VoyagerHPC mean the system is future-proofed for tomorrow’s needs. As an edge technology specialist, Klas continues to evolve and offers custom development support for its range of HPC expansion modules. With Klas, secure and maximize the overall return on investment in HPC at the edge for years to come.

To learn more about VoyagerHPC and its expansion capabilities, check out our blogs, datasheets and video overviews:

Datasheets and Videos:

1. VoyagerHPC – https://www.klasgroup.com/products/voyagerhpc/